Moisture Measurement Benefits in Feed and Grain

Feed and grain producers will be familiar with the benefits of controlling moisture at the various stages of the production process. Monitoring the moisture in the raw materials, controlling water addition during mixing and blending, and checking the moisture of the finished product all enable substantial savings. Product yields can be maximised, ingredients used efficiently, energy consumption reduced and a quality finished product ensured.

For these reasons, more producers are considering installing moisture control systems, and they need solutions that offer the best accuracy and flexibility at the most competitive price.

There is an increasingly wide choice of moisture measurement systems available, all claiming to have the best and latest technology. But how does a producer, whose expertise lies in other fields, know which system to choose?

There are five main technologies on the market: resistive, capacitive, infrared, nuclear and microwave. Their accuracy, and their ability to solve the problem, vary considerably. The first four all have limitations. Resistive and capacitive sensors are susceptible to impurities that may lead to erroneous results. Infrared tends to be relatively expensive and can be affected by dust and vapour, so it typically suits dry, clean, controlled environments. Nuclear systems are very expensive and, although very accurate in certain applications, can be difficult to maintain. Microwave sensors, by contrast, are not affected by dust, vapour or colour, and have proved overall to be the best option in terms of ease of use, reliability, accuracy and cost-effectiveness, usually recovering the capital expenditure within a matter of months.

Having settled on the microwave method, choosing the right sensor becomes more involved. Some microwave sensors are purely analogue, some are analogue but output their results in a digital format, and some actually use a digital measuring technique.

Measurement Technique

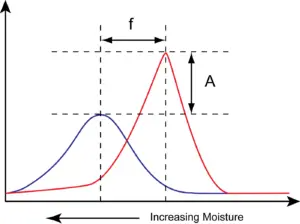

There are various ways of using microwaves to measure moisture, and one of the most common is the resonant cavity technique. Traditionally, an analogue microwave sensor measured moisture through a combination of the resonator's frequency shift (the left-to-right movement of the response) and amplitude attenuation (the change in the height of the response), see figure 1. This combination was measured as a single analogue response, so the frequency shift and the attenuation could not be separated.

In the 1980s, Hydronix, a leading manufacturer of microwave moisture measurement systems, introduced the world’s first digital microwave sensor. This broke new ground by enabling the frequency shift component to be measured accurately using digital techniques for the first time. The development brought two significant improvements: greater accuracy, and a much wider moisture range over which the sensor gives a true linear response as moisture increases.

Every material affects the microwave field generated by the sensor differently. As moisture increases, the response shifts in frequency and falls in amplitude.

Figure 1: Moisture changes the dielectric properties of a material, resulting in a frequency shift and amplitude attenuation
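To illustrate how a digital scan can separate the two components, the sketch below models the resonance as a simple Lorentzian curve (an assumption; real sensor responses and operating frequencies will differ), samples it at evenly spaced frequencies and locates the peak. The movement of the peak gives the frequency shift and the change in its height gives the attenuation. The function names and all numerical values are hypothetical.

```python
# Hypothetical model: the resonator's magnitude response is treated as a
# Lorentzian curve. Frequencies are in MHz; all values are illustrative only.


def response(f, f0, peak, width):
    """Magnitude of a resonance centred on f0 with the given peak height and width."""
    x = 2.0 * (f - f0) / width
    return peak / (1.0 + x * x)


def sweep(f0, peak, width, f_start, f_stop, steps):
    """Sample the response at evenly spaced frequencies, as a digital scan would."""
    step = (f_stop - f_start) / (steps - 1)
    freqs = [f_start + i * step for i in range(steps)]
    return [(f, response(f, f0, peak, width)) for f in freqs]


def locate_peak(samples):
    """Return (frequency, amplitude) at the top of the scanned response.

    The highest sample is refined by fitting a parabola through it and its
    two neighbours, so the estimate is not limited to the scan step.
    """
    i = max(range(len(samples)), key=lambda k: samples[k][1])
    if i == 0 or i == len(samples) - 1:
        return samples[i]  # peak at the edge of the scan: no refinement possible
    (f_a, y_a), (f_b, y_b), (_, y_c) = samples[i - 1], samples[i], samples[i + 1]
    denom = y_a - 2.0 * y_b + y_c
    if denom == 0:
        return samples[i]
    offset = 0.5 * (y_a - y_c) / denom  # vertex position, in units of the scan step
    return f_b + offset * (f_b - f_a), y_b - 0.25 * (y_a - y_c) * offset


# Dry and wetter material: the resonance moves and its peak drops.
dry_f, dry_a = locate_peak(sweep(900.0, 1.0, 20.0, 800.0, 1000.0, 401))
wet_f, wet_a = locate_peak(sweep(880.0, 0.7, 20.0, 800.0, 1000.0, 401))
frequency_shift = wet_f - dry_f  # separated frequency component
attenuation = dry_a - wet_a      # separated amplitude component
```

Because the scan measures the whole curve, the two quantities come out independently rather than as one combined analogue value.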

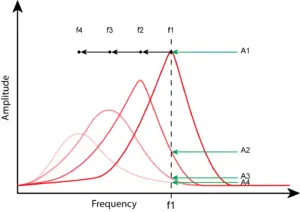

Less advanced sensors simply measure a change in amplitude at a fixed frequency. Measuring across a custom frequency range gives more accurate results than sensors that work within the confines of a single frequency in an open band, such as those used by burglar alarm systems or Wi-Fi (433 MHz or 2.4 GHz). The components needed to build a sensor that measures a frequency shift, rather than a change in amplitude at a fixed frequency, are more complex but give a superior result. The reason for this is shown in figures 2 and 3.

Figure 2 shows the difference between a digital multi-frequency measurement and an analogue measurement of the amplitude change alone at a fixed frequency (f1).

As moisture increases, the resonant frequency shifts from f1 to f2, f3 and then f4, and the shifts between successive frequencies are similar in magnitude. A sensor with a digital measurement technique continually scans the frequency response and tracks these equal changes in frequency as the material becomes wetter.

For the same moisture changes, a single-frequency sensor that simply measures the change in amplitude at frequency f1 will register changes from A1 to A2, A3 and then A4. These steps become progressively smaller, so the sensor gradually loses the ability to register a change as the material becomes wetter. Typically, a good analogue sensor will lose the ability to register further changes in moisture from about 12% upwards.
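The effect described for figure 2 can be reproduced numerically with the same kind of hypothetical Lorentzian model: the resonance moves in equal frequency steps (f1 to f4) while a fixed-frequency sensor reads the amplitude at f1 (A1 to A4). The width, step size and attenuation below are illustrative assumptions, not measured sensor data.

```python
# Hypothetical Lorentzian resonance model; all constants are illustrative.
F1 = 900.0         # resonant frequency of the dry material, MHz (assumed)
WIDTH = 20.0       # resonance width, MHz (assumed)
SHIFT = -10.0      # equal frequency shift per moisture step, MHz (assumed)
ATTENUATION = 0.9  # fraction of peak height kept per moisture step (assumed)


def response(f, f0, peak, width):
    """Magnitude of a resonance centred on f0, evaluated at frequency f."""
    x = 2.0 * (f - f0) / width
    return peak / (1.0 + x * x)


def moisture_steps(n=4):
    """For each moisture step return (tracked frequency, amplitude read at fixed F1)."""
    rows = []
    for k in range(n):
        f0 = F1 + k * SHIFT           # f1, f2, f3, f4 in the text
        peak = ATTENUATION ** k       # the resonance also gets weaker
        rows.append((f0, response(F1, f0, peak, WIDTH)))  # A1..A4 in the text
    return rows


rows = moisture_steps()
freq_changes = [b[0] - a[0] for a, b in zip(rows, rows[1:])]
amp_changes = [a[1] - b[1] for a, b in zip(rows, rows[1:])]
```

With these values the tracked frequency changes by the same amount at every step, while each successive amplitude change is smaller than the last, which is the loss of sensitivity described above.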

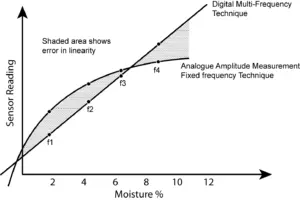

This not only affects the sensor's ability to register changes in moisture above this level, but also means that the entire moisture curve lacks linearity, as shown in figure 3.

With a non-linear measurement technique, the sensor reading must undergo significant mathematical manipulation before it can output what appears to be a linear response to changes in moisture.
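A minimal sketch of why this manipulation is costly, assuming (hypothetically) that moisture moves the resonance linearly and that the sensor reads only the amplitude at the dry frequency, with attenuation ignored. Inverting the curve recovers moisture exactly when there is no noise, but the same small amplitude error turns into a much larger moisture error where the curve has flattened. The constants and function names are assumptions for illustration.

```python
import math

SHIFT_PER_PERCENT = 5.0  # assumed frequency shift per 1% moisture, MHz
WIDTH = 20.0             # assumed resonance width, MHz


def amplitude_at_f1(moisture):
    """Non-linear reading: amplitude at the dry resonant frequency f1 (model only)."""
    x = 2.0 * SHIFT_PER_PERCENT * moisture / WIDTH
    return 1.0 / (1.0 + x * x)


def linearise(amplitude):
    """Invert amplitude_at_f1 to recover moisture: the 'mathematical manipulation'."""
    if not 0.0 < amplitude <= 1.0:
        raise ValueError("amplitude outside the model's range")
    return WIDTH / (2.0 * SHIFT_PER_PERCENT) * math.sqrt(1.0 / amplitude - 1.0)


def moisture_error(moisture, amplitude_noise=0.001):
    """Moisture error after linearisation caused by a fixed amplitude error (assumed size)."""
    reading = amplitude_at_f1(moisture) - amplitude_noise
    return linearise(reading) - moisture
```

In this model an amplitude error that costs about 0.01% moisture at 4% costs more than ten times as much at 12%, because the curve being inverted is almost flat there.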

A digital multi-frequency measurement can measure from 0% moisture through to material saturation while maintaining sensitivity and precision across the full working range. This highlights a key difference between two kinds of sensor: an analogue sensor that claims to be digital because it processes the signal to output a seemingly linear measurement, and a sensor that uses a digital microwave measurement technique that is inherently linear. The latter can still apply further processing on top of its linear response to fine-tune the curve and to provide features such as on-board signal smoothing, alarms and averaging functions.
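As an illustration of such post-processing, the class below applies exponential smoothing to each reading, averages the smoothed readings over a batch and raises a high or low alarm. It is a hypothetical sketch: the class name, smoothing factor and alarm limits are assumptions, not any manufacturer's firmware or parameters.

```python
from typing import List, Optional


class MoistureProcessor:
    """Hypothetical smoothing, batch-averaging and alarm stage for % moisture readings."""

    def __init__(self, alpha: float = 0.2, low_alarm: float = 10.0, high_alarm: float = 15.0):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha            # smoothing factor: lower means heavier smoothing
        self.low_alarm = low_alarm    # assumed lower moisture limit, %
        self.high_alarm = high_alarm  # assumed upper moisture limit, %
        self.smoothed: Optional[float] = None
        self.batch: List[float] = []

    def update(self, reading: float) -> float:
        """Exponentially smooth a new reading and record it for batch averaging."""
        if self.smoothed is None:
            self.smoothed = reading
        else:
            self.smoothed += self.alpha * (reading - self.smoothed)
        self.batch.append(self.smoothed)
        return self.smoothed

    def alarm(self) -> Optional[str]:
        """Return 'low' or 'high' if the smoothed value is outside the limits, else None."""
        if self.smoothed is None:
            return None
        if self.smoothed < self.low_alarm:
            return "low"
        if self.smoothed > self.high_alarm:
            return "high"
        return None

    def end_batch(self) -> float:
        """Return the average smoothed moisture for the batch and start a new one."""
        if not self.batch:
            raise ValueError("no readings in the current batch")
        average = sum(self.batch) / len(self.batch)
        self.batch = []
        return average
```

Smoothing damps a single spurious spike rather than passing it straight to the control system, at the cost of a slower response to genuine changes.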

Accuracy Requires Calibration

Calibration is the process of defining the relationship between the change in the sensor response and the change in the moisture content of the material. It is required because each material has its own electrical (dielectric) properties, which affect the microwave field differently.
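Assuming the linear relationship described in this article, a calibration reduces to a slope and an offset for each material. The sketch below derives them from two reference samples whose moisture has been measured in the laboratory; the function names, reading units and sample values are hypothetical.

```python
def two_point_calibration(reading_1, moisture_1, reading_2, moisture_2):
    """Return (slope, intercept) of the straight line through two reference samples."""
    if reading_1 == reading_2:
        raise ValueError("the two reference readings must differ")
    slope = (moisture_2 - moisture_1) / (reading_2 - reading_1)
    return slope, moisture_1 - slope * reading_1


def to_moisture(reading, calibration):
    """Convert a raw sensor reading to % moisture using a (slope, intercept) pair."""
    slope, intercept = calibration
    return slope * reading + intercept


# Each material gets its own calibration (hypothetical readings and lab results).
calibrations = {
    "wheat": two_point_calibration(40.0, 10.0, 60.0, 14.0),
    "barley": two_point_calibration(42.0, 10.0, 66.0, 14.0),
}
```

The same raw reading converts to a different moisture for each material, which is why one calibration cannot simply be reused across materials.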

A good sensor should only need calibrating once for a given material, whereas a poor-quality sensor will need frequent recalibration to compensate for its errors. Once well calibrated, an accurate, temperature-stable moisture sensor should track up and down the linear moisture line all year round.

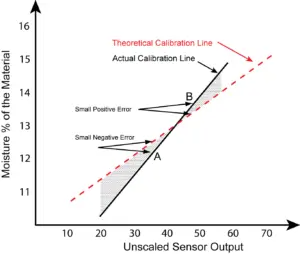

However, a good sensor that is poorly calibrated, or an unreliable sensor that is well calibrated, may perform well for a short period when operating at or around the moisture range for which it was calibrated. As the sensor moves away from this range, the reading will show an ever-increasing error. The same error will also exist for a well-calibrated sensor whose underlying measurement technique is non-linear.

For the demanding end-user this matters: even with an accurate sensor, a small calibration error can lead to large errors as the moisture varies, as shown in figure 4.

However appealing it may be, leading manufacturers would agree that relying on a manufacturer's claim that its equipment needs no calibration will lead to sub-optimal results in the field, regardless of the technical claims made about the sensor's measurement technique.

A well-calibrated sensor that uses a different calibration slope for each material, for example wheat and barley, is likely to be more accurate than a sensor that claims one calibration fits all.

Figure 4 shows the increasing inaccuracy that arises from either a very small calibration error or an assumed gradient for the calibration slope. At the point of calibration, between 12.5% and 13.5% moisture, the sensor is reasonably accurate. As the working moisture range moves away from this range, perhaps because material arrives from a different supplier, the error progressively increases. This is shown by the grey area, which is the difference between the theoretically correct calibration and the one in use.
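The growth of the error follows directly from the straight-line model: if the calibration in use gives the correct value at the calibration point but has a slightly wrong slope, the error equals the slope difference multiplied by the distance from that point. The sketch below assumes a hypothetical true calibration line and a slope that is 5% too low, calibrated at 13% moisture.

```python
TRUE_SLOPE, TRUE_INTERCEPT = 0.2, 2.0  # hypothetical "theoretically correct" calibration
CAL_READING = 55.0                     # reading at the calibration point (13% moisture here)


def line_in_use(slope_error=0.05):
    """Calibration with a slope that is slope_error too low, correct at CAL_READING."""
    slope = TRUE_SLOPE * (1.0 - slope_error)
    true_at_cal = TRUE_SLOPE * CAL_READING + TRUE_INTERCEPT
    intercept = true_at_cal - slope * CAL_READING  # passes through the calibration point
    return slope, intercept


def calibration_error(reading, slope_error=0.05):
    """Indicated moisture minus true moisture for a given raw reading."""
    slope, intercept = line_in_use(slope_error)
    indicated = slope * reading + intercept
    true = TRUE_SLOPE * reading + TRUE_INTERCEPT
    return indicated - true
```

The error is zero at the calibration point and grows linearly in both directions, which matches the widening grey area in figure 4.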

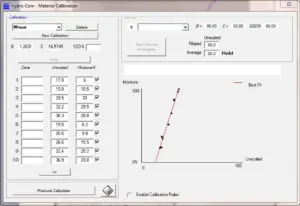

It is usual for moisture sensors measuring in silos to be calibrated to an absolute moisture percentage. Hydronix always recommends a multi-point technique, such as that used in its free software, Hydro-Com (see figure 5), as the best and most failsafe method for calibrating a sensor.
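A multi-point calibration can be sketched as an ordinary least-squares straight-line fit through several (sensor reading, laboratory moisture) pairs. This is a generic technique shown for illustration; it is not a description of how Hydro-Com computes its calibration, and the data points are hypothetical.

```python
def fit_calibration(points):
    """Return (slope, intercept) of the least-squares line moisture = slope * reading + intercept."""
    n = len(points)
    if n < 2:
        raise ValueError("at least two calibration points are needed")
    mean_r = sum(r for r, _ in points) / n
    mean_m = sum(m for _, m in points) / n
    sxx = sum((r - mean_r) ** 2 for r, _ in points)
    if sxx == 0:
        raise ValueError("calibration readings must not all be equal")
    sxy = sum((r - mean_r) * (m - mean_m) for r, m in points)
    slope = sxy / sxx
    return slope, mean_m - slope * mean_r


def residuals(points, slope, intercept):
    """Laboratory moisture minus calibrated moisture for each calibration point."""
    return [m - (slope * r + intercept) for r, m in points]


# Hypothetical calibration data: (sensor reading, laboratory moisture %).
points = [(41.0, 10.1), (48.0, 11.7), (55.0, 13.0), (63.0, 14.6), (70.0, 16.1)]
slope, intercept = fit_calibration(points)
errors = residuals(points, slope, intercept)
```

Spreading the points across the working range helps average out individual sampling errors and pins down the slope, which is the quantity that drives the growing error shown in figure 4.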

Advances in Technology

More advanced microwave moisture sensors, such as those manufactured by Hydronix, now let the user choose between different measurement modes, which is of particular interest to feed and grain producers. Different organic materials have their own distinct dielectric behaviour and therefore respond differently to each of the underlying measurement modes. The user can now select the mode best suited to the material and the application, taking account of the temperature range, the precision required and changes in bulk density over time.

The new measurement modes were introduced into the Hydronix Hydro-Mix XT sensor for mixers and conveyors during 2010 and proved to be extremely successful in the feed and grain industry providing producers with more accurate results than ever before.

This technology was then introduced in 2011 into the new generation Hydro-Probe XT sensor. Typically installed underneath silos or on conveyors, the new Hydro-Probe XT has proved even more successful than its predecessor. By eliminating the need for repeated, time-consuming manual moisture tests, it supplies an instant and continuous moisture reading directly to the control system. This allows driers to be adjusted immediately, or the correct amount of additives, such as mould inhibitors, to be added. For a relatively small capital outlay, labour and energy costs are reduced, as is the amount of spoilt material, potentially saving the producer tens of thousands of pounds a year.

To conclude

Many moisture measurement sensors are available on the market, all claiming to provide the best and most accurate results. In the feed and grain industry, the microwave measurement technique has proved to give the best results. When choosing a sensor, you should ensure that it is fully temperature-stable and completely linear over the working range in which you wish to operate. A digital sensor whose underlying measurement technique maintains linearity from the absorption point (also known as SSD, saturated surface dry) to complete saturation will, by definition, give excellent accuracy, limited only by the accuracy of its calibration.